Deployment patterns

Distributed patterns for running containers on Kubernetes

Contents taken from the book: https://www.amazon.com/Designing-Distributed-Systems-Patterns-Paradigms/dp/1491983647

Single node patterns

Patterns for grouping collections of containers that are scheduled on the same machine. These groups are tightly coupled, symbiotic systems. They depend on local, shared resources like disk, network interface, or inter-process communications.

The sidecar patterns

The sidecar pattern is a single-node pattern made up of two containers. The first is the application container. The second is the sidecar container.

- The sidecar's role is to augment and improve the application container, often without the application container’s knowledge. It is a way to adapt legacy applications when you no longer want to modify the original source code.

- Sidecar containers are coscheduled onto the same machine via an atomic container group, such as the pod API object in Kubernetes.

Use cases

- Adding HTTPS to a Legacy Service

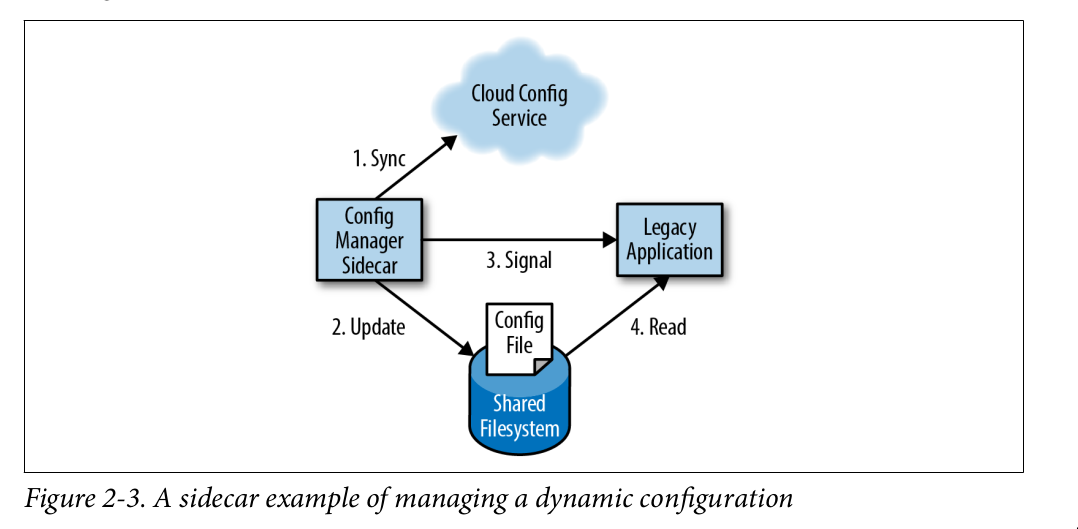

- Dynamic Configuration with Sidecars

Ambassador patterns

The application container is connected to an ambassador container, which handles routing logic on behalf of the application container.

Using an Ambassador to Shard a Service

Adapting an existing service to talk to a sharded service that exists somewhere in the world

You can introduce an ambassador container that contains all of the logic needed to route requests to the appropriate storage shard
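The routing logic inside such an ambassador can be sketched as follows. This is a minimal illustration, not the book's implementation: the shard addresses in `SHARDS` and the function name `shard_for` are hypothetical, and hashing the key modulo the shard count is just one simple way to pick a shard.

```python
import hashlib
from typing import List

# Hypothetical shard addresses; a real ambassador would read these from configuration.
SHARDS = ["shard-0.storage:6379", "shard-1.storage:6379", "shard-2.storage:6379"]


def shard_for(key: str, shards: List[str] = SHARDS) -> str:
    """Return the shard responsible for a key.

    The key is hashed with SHA-256 so the mapping is stable across processes
    (Python's built-in hash() is randomized per process for strings).
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]
```

The application keeps talking to what looks like a single local storage endpoint, while the ambassador calls something like `shard_for(key)` for each request and forwards it to the chosen shard.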

Using an Ambassador to Do Experimentation or Request Splitting

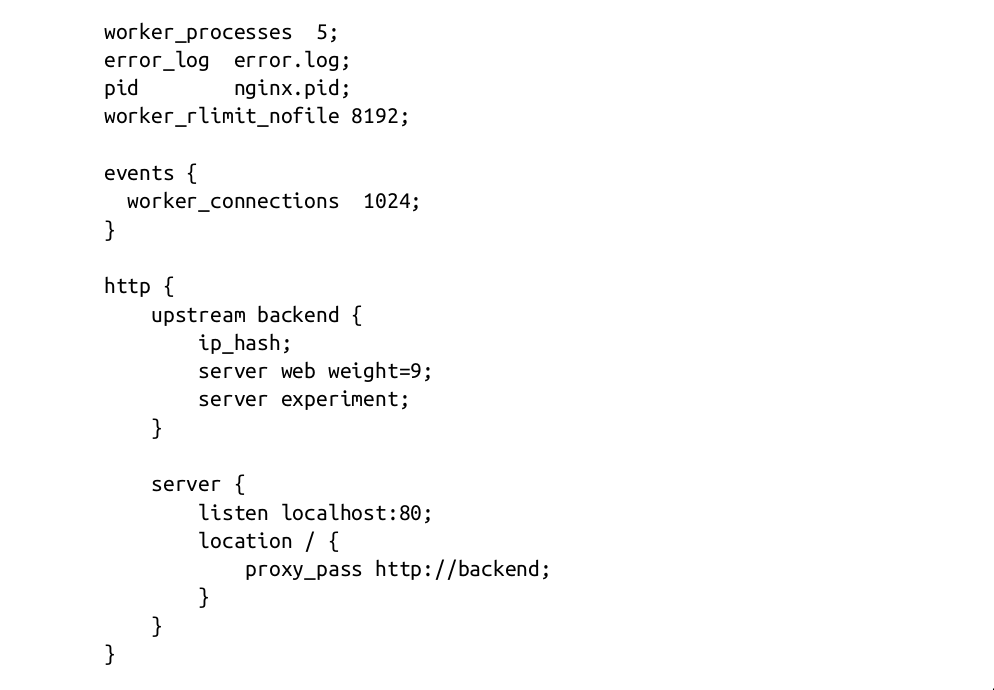

Implementing 10% Experiments

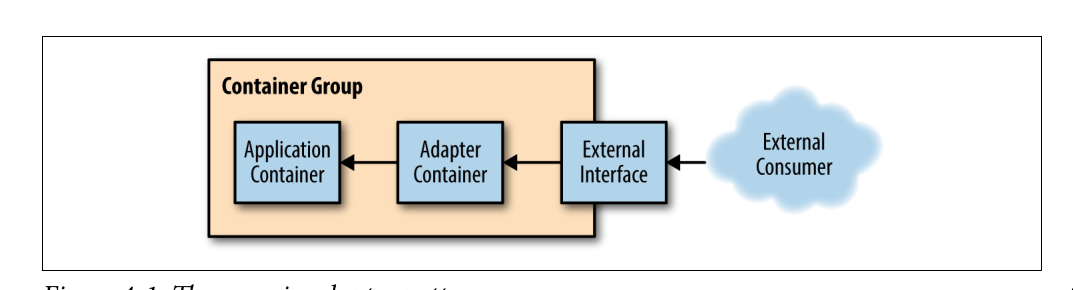
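The decision an ambassador makes for a 10% experiment can be sketched in a few lines. This is an assumption-laden illustration: the backend names `"production"` and `"experiment"` and the function `choose_backend` are hypothetical, and a real deployment would typically express the same split as weights in a proxy configuration rather than in application code.

```python
import random
from typing import Optional


def choose_backend(experiment_fraction: float = 0.10,
                   rng: Optional[random.Random] = None) -> str:
    """Send roughly `experiment_fraction` of requests to the experiment backend."""
    rng = rng or random.Random()
    # rng.random() is uniform in [0.0, 1.0), so this branch is taken
    # with probability equal to experiment_fraction.
    if rng.random() < experiment_fraction:
        return "experiment"
    return "production"
```

Passing a seeded `random.Random` makes the split reproducible in tests, while the default gives an independent random choice per request.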

Adapter patterns

An adapter container is used to modify the interface of the application container so that it conforms to a predefined interface that is expected of all applications.

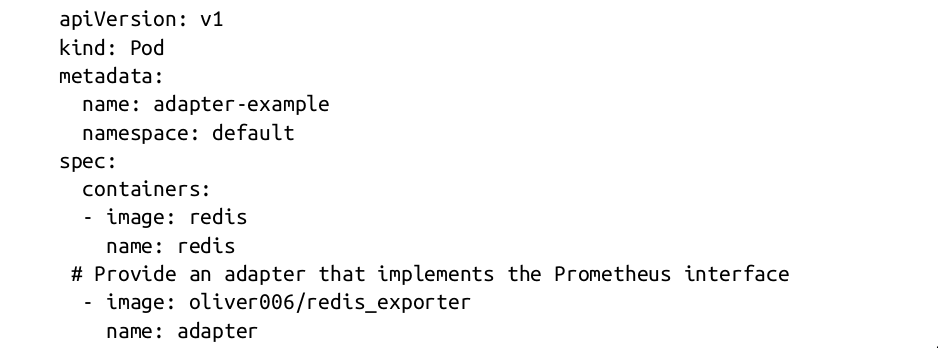

Using Prometheus for Monitoring

To collect metrics from a variety of different systems, Prometheus expects every container to expose a specific metrics API.

However, many popular programs, such as the Redis key-value store, do not export metrics in a format that is compatible with Prometheus. The adapter pattern is quite useful for taking an existing service like Redis and adapting it to the Prometheus metrics-collection interface.

By adding an adapter container (in this case, an open source Prometheus exporter), we can modify the pod to export the correct interface and thus adapt it to fit Prometheus’s expectations.
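To make the idea of "adapting the interface" concrete, here is a rough sketch of the core translation such an adapter performs. It is not the code of the actual open source exporter: the function name `redis_info_to_prometheus` and the `redis_` prefix are assumptions, and it only converts numeric `key:value` lines (the shape of Redis `INFO` output) into simple `name value` lines in the Prometheus text format.

```python
def redis_info_to_prometheus(info_text: str, prefix: str = "redis_") -> str:
    """Translate Redis INFO-style text into Prometheus text-format samples."""
    samples = []
    for raw in info_text.splitlines():
        line = raw.strip()
        # INFO output groups fields under "# Section" headers; skip those and blanks.
        if not line or line.startswith("#"):
            continue
        name, sep, value = line.partition(":")
        if not sep:
            continue
        try:
            number = float(value)
        except ValueError:
            # Non-numeric fields (for example a version string like 7.2.4) are dropped.
            continue
        samples.append(f"{prefix}{name} {number}")
    return "\n".join(samples) + "\n"
```

In a pod, the adapter container would query Redis over localhost, run a translation like this one, and serve the result on the HTTP endpoint that Prometheus scrapes.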

Normalizing Different Logging Formats with Fluentd

Here we use the adapter pattern with a redis container as the main application container and the fluentd container as our adapter container. In this case, we also use the fluent-plugin-redis-slowlog fluentd plugin to listen for slow queries. We can configure this plugin using the following snippet:

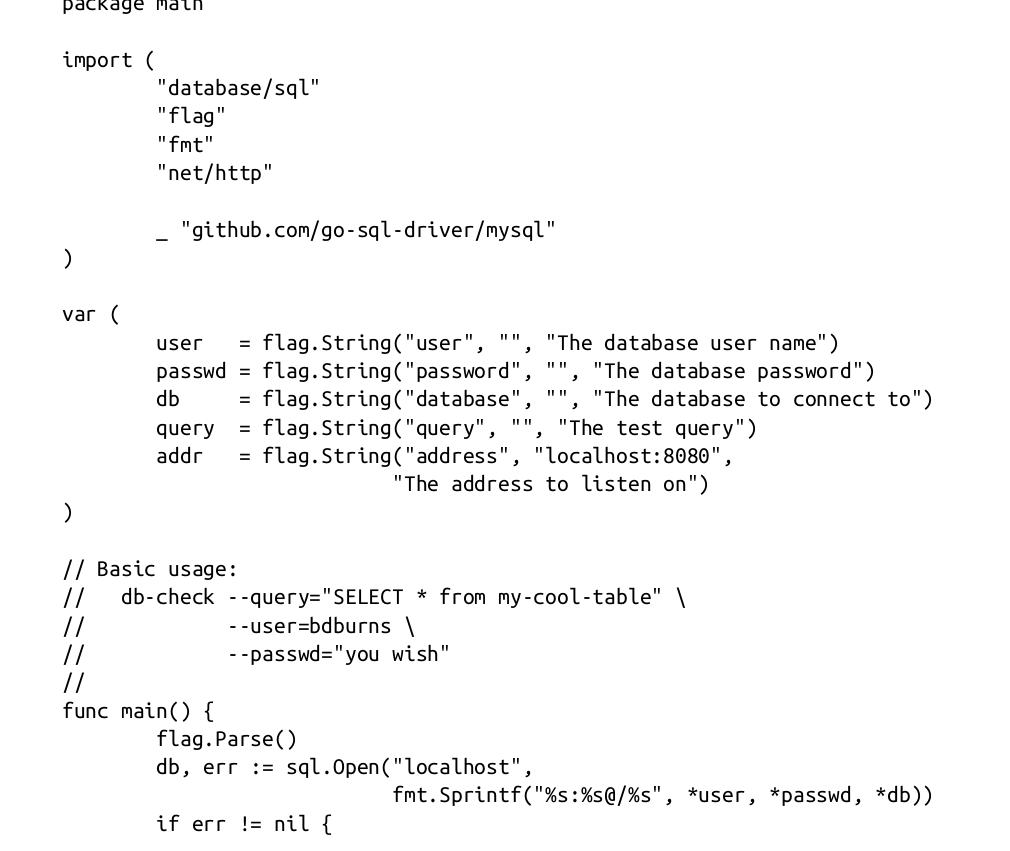

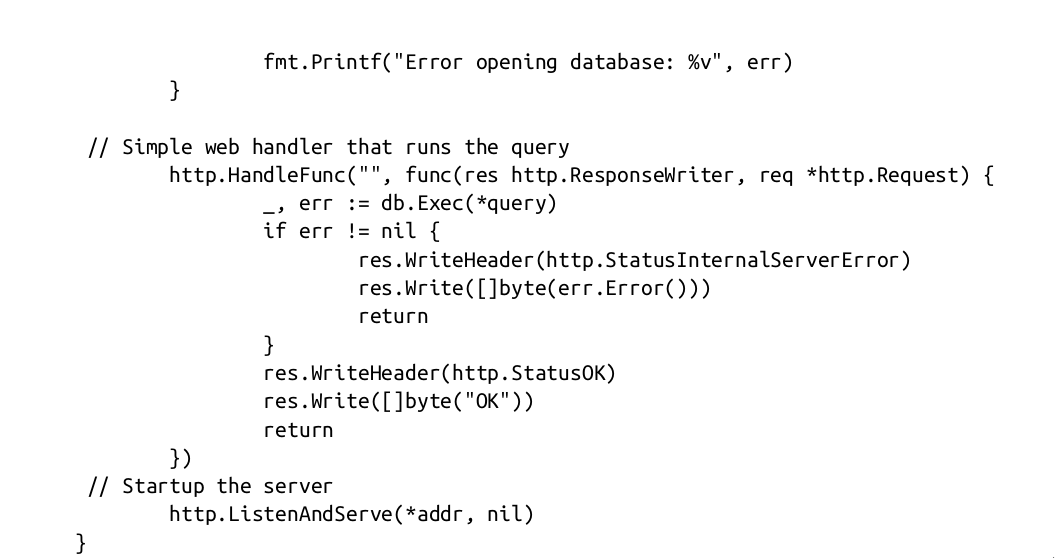

Adding Rich health monitoring with MySQL

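Suppose that you want to add deep monitoring to a MySQL database, where the health check actually runs a query that is representative of your workload. An adapter container can provide this check without modifying the MySQL container itself.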
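A deep health check of this kind can be sketched independently of any particular database driver. In the sketch below, `run_query` is a hypothetical callable supplied by the caller that executes the representative query (for example through whichever MySQL client library is in use), and the one-second threshold is an arbitrary assumption.

```python
import time
from typing import Callable


def deep_health_check(run_query: Callable[[], object],
                      max_seconds: float = 1.0,
                      clock: Callable[[], float] = time.monotonic) -> bool:
    """Report healthy only if the representative query succeeds quickly enough."""
    start = clock()
    try:
        run_query()
    except Exception:
        # Any failure to execute the query counts as unhealthy.
        return False
    # A query that succeeds but takes too long is also treated as unhealthy.
    return clock() - start <= max_seconds
```

The adapter container would expose the result of this check on an HTTP endpoint that the orchestrator polls as the health check for the database container.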

Serving patterns

Microservices

There are numerous benefits to the microservices approach, most of them centered around reliability and agility. But there are also downsides to the microservices approach:

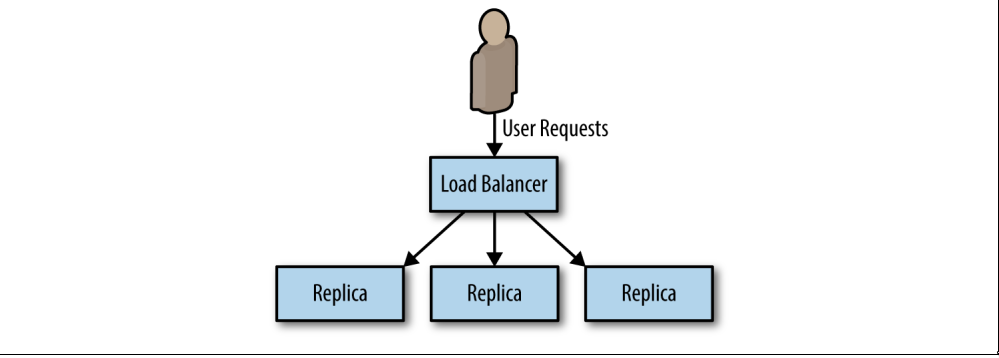

Replicated Load balanced Services

In a replicated load-balanced service, every server is identical to every other server and all are capable of supporting traffic.

Stateless Services

Stateless services are ones that don't require saved state to operate correctly.

Readiness Probes for Load Balancing

Health probes can be used by a container orchestration system to determine when an application needs to be restarted.

A readiness probe determines when an application is ready to serve user requests.

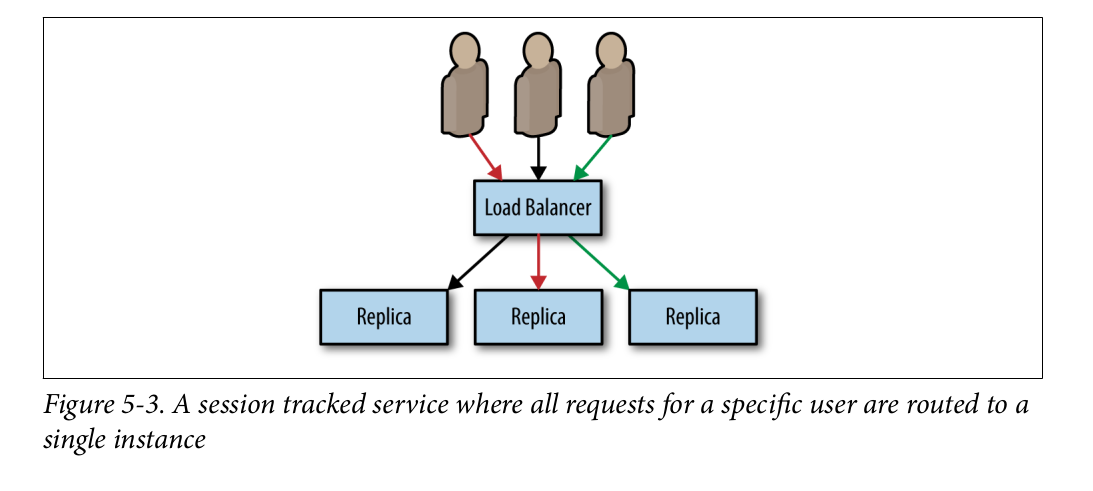
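On the application side, a readiness endpoint can be as small as the sketch below. The `/ready` path, port 8080, and the `READY` flag are assumptions for illustration; the only convention relied on is that an HTTP probe treats a 200 response as success and a 503 as failure.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Set once startup work (hypothetical: loading config, warming caches) has finished.
READY = threading.Event()


def readiness_status(ready: bool) -> int:
    """HTTP status for the readiness endpoint: 200 when ready, 503 otherwise."""
    return 200 if ready else 503


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/ready":
            self.send_response(readiness_status(READY.is_set()))
        else:
            self.send_response(404)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Serve the probe endpoint until the process exits."""
    HTTPServer(("0.0.0.0", port), ProbeHandler).serve_forever()
```

Until `READY.set()` is called, the endpoint answers 503, so a load balancer driven by the readiness probe would not send traffic to this replica yet.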

Session Tracked Services

Often there are reasons for wanting to ensure that a particular user’s requests always end up on the same machine. Sometimes this is because you are caching that user’s data in memory, so landing on the same machine ensures a higher cache hit rate. In that case, some amount of state is maintained between requests.

Session tracking is accomplished via a consistent hashing function.

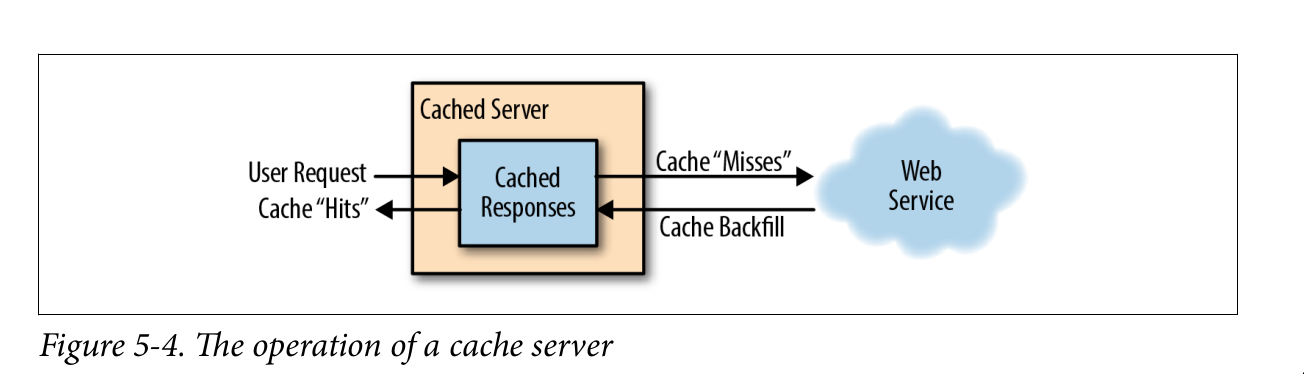

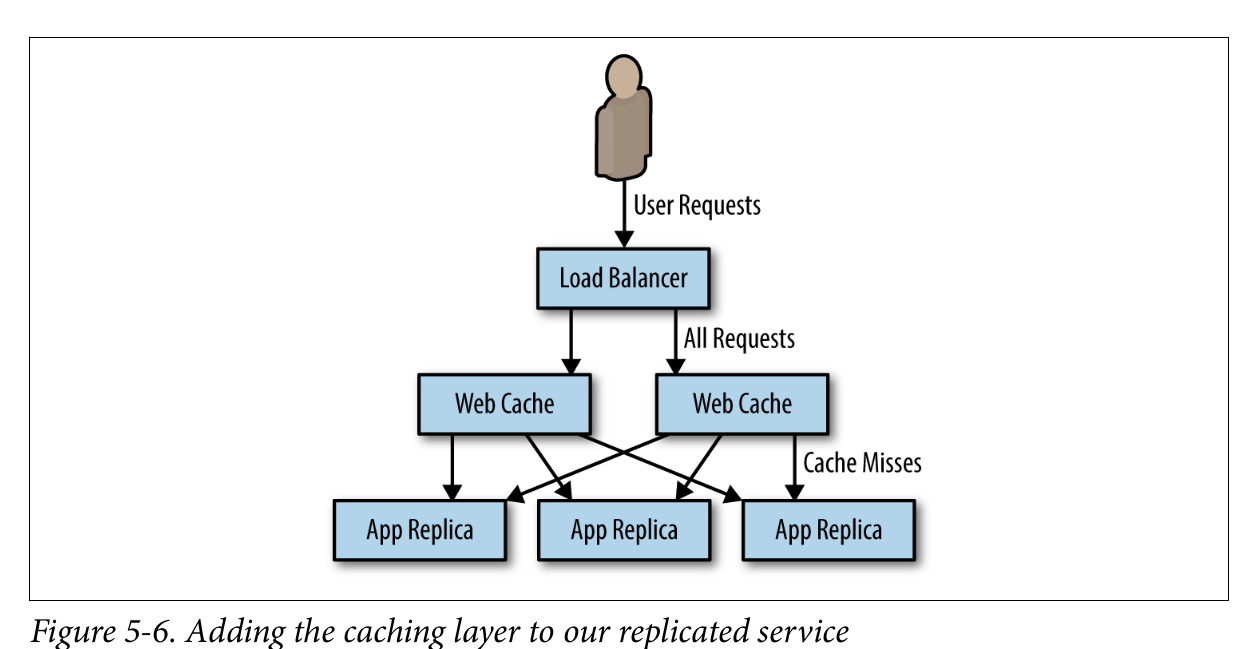
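One common form of consistent hashing is a hash ring, sketched below under some assumptions: the class name `HashRing`, the use of SHA-256, and the 100 virtual points per server are illustrative choices, and the session key could be a client IP address or a cookie value.

```python
import bisect
import hashlib
from typing import Iterable, List, Tuple


def _hash(value: str) -> int:
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes: Iterable[str], replicas: int = 100):
        self._replicas = replicas
        self._ring: List[Tuple[int, str]] = []  # sorted (point, node) pairs
        for node in nodes:
            self.add(node)

    def add(self, node: str) -> None:
        # Each node owns several points on the ring to even out the load.
        for i in range(self._replicas):
            bisect.insort(self._ring, (_hash(f"{node}#{i}"), node))

    def remove(self, node: str) -> None:
        self._ring = [(point, n) for point, n in self._ring if n != node]

    def lookup(self, key: str) -> str:
        if not self._ring:
            raise ValueError("ring is empty")
        # Find the first point at or after the key's hash, wrapping around at the end.
        index = bisect.bisect_left(self._ring, (_hash(key), ""))
        return self._ring[index % len(self._ring)][1]
```

The useful property is that removing a server only remaps the sessions that were on that server; every other user keeps landing on the same machine.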

Introducing a Caching Layer

Sometimes the code in your stateless service is still expensive despite being stateless. It might make queries to a database to service requests or do a significant amount of rendering or data mixing to service the request. In such cases, a caching layer can make a great deal of sense.

A caching proxy is simply an HTTP server that keeps responses to user requests in memory. If two users request the same web page, only one request will go to your backend; the other will be serviced out of memory in the cache.

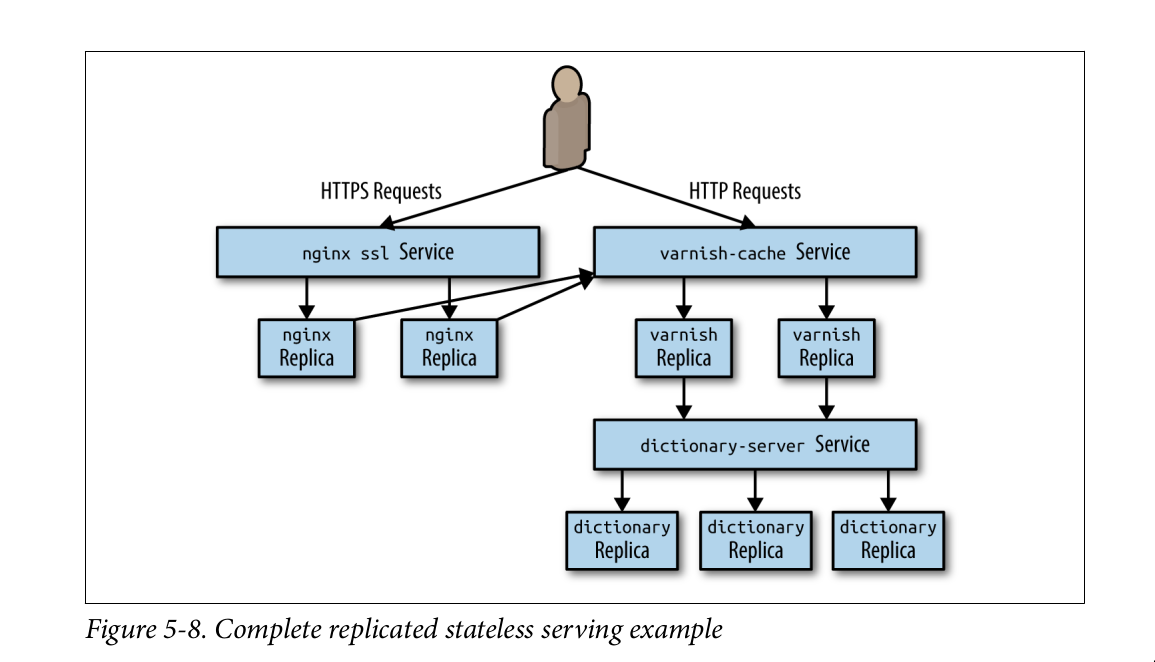
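The behavior of a caching layer can be illustrated with a small in-memory sketch. This is not how any particular web cache is implemented: the class `CachingFrontend`, the `fetch` callable standing in for the backend, and the 60-second time-to-live are all assumptions made for the example.

```python
import time
from typing import Callable, Dict, Tuple


class CachingFrontend:
    def __init__(self, fetch: Callable[[str], bytes],
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch          # stand-in for a request to the backend service
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, bytes]] = {}  # path -> (expiry, body)

    def get(self, path: str) -> bytes:
        now = self._clock()
        cached = self._entries.get(path)
        if cached is not None and cached[0] > now:
            return cached[1]  # cache hit: the backend is not contacted
        body = self._fetch(path)  # cache miss or expired entry
        self._entries[path] = (now + self._ttl, body)
        return body
```

Two requests for the same path within the time-to-live result in a single call to `fetch`, which is the effect described above.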

For our purposes, we will use Varnish, an open source web cache.

Rate Limiting and Denial-of-Service Defense

When a user hits the rate limit, the server returns the 429 error code, indicating that too many requests have been issued. However, many users want to understand how many requests they have left before hitting that limit. For that, we populate an HTTP header, X-RateLimit-Remaining, with the remaining-calls information.
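The interaction between the 429 status and the X-RateLimit-Remaining header can be sketched with a simple fixed-window limiter. The notes above do not name an algorithm, so the fixed window, the class name `FixedWindowLimiter`, and the per-client bookkeeping are assumptions chosen for brevity.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowLimiter:
    def __init__(self, limit: int, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._limit = limit
        self._window = window_seconds
        self._clock = clock
        self._windows: Dict[str, Tuple[float, int]] = {}  # client -> (window start, used)

    def check(self, client_id: str) -> Tuple[int, Dict[str, str]]:
        """Return (HTTP status, response headers) for one request from client_id."""
        now = self._clock()
        start, used = self._windows.get(client_id, (now, 0))
        if now - start >= self._window:
            start, used = now, 0  # the previous window has expired
        if used >= self._limit:
            self._windows[client_id] = (start, used)
            return 429, {"X-RateLimit-Remaining": "0"}
        used += 1
        self._windows[client_id] = (start, used)
        return 200, {"X-RateLimit-Remaining": str(self._limit - used)}
```

With `limit=2`, a client sees the header count down from 1 to 0 on its first two requests and receives 429 on the third until the window resets.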

SSL Termination

Sharded service

Sharded services are generally used for building stateful services. The primary reason for sharding the data is that the state is too large to be served by a single machine.