Database caching strategy

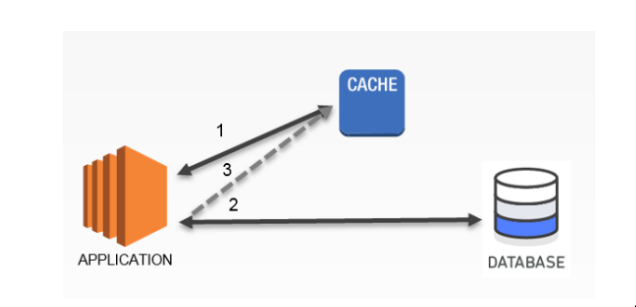

1. Cache-Aside (Lazy loading)

- When your application needs to read data from the database, it checks the cache first to determine whether the data is available

- If the data is available (a cache hit), the cached data is returned, and the response is issued to the caller

- If the data isn't available (a cache miss), the database is queried. The cache is then populated with the data that is retrieved from the database, and the data is returned to the caller
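The read path above can be sketched as follows. This is a minimal illustration, not a production implementation: the `cache` and `database` dictionaries are hypothetical in-memory stand-ins for a real cache engine and a real database, and `get_product` is an assumed name.

```python
from typing import Optional

# Hypothetical in-memory stand-ins for the cache and the primary database.
cache: dict[str, str] = {}
database: dict[str, str] = {"product:1": "Keyboard"}


def get_product(key: str) -> Optional[str]:
    """Read a value using the cache-aside (lazy loading) pattern."""
    # 1. Check the cache first.
    if key in cache:
        return cache[key]  # cache hit: no database round trip

    # 2. Cache miss: query the database.
    value = database.get(key)

    # 3. Populate the cache with the retrieved data, then return it.
    if value is not None:
        cache[key] = value
    return value
```

The first call for a key pays for both the cache lookup and the database query; later calls for the same key are served from the cache.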

Advantages:

- The cache contains only data that the application requests, which helps keep the cache size cost-effective

- Implementation is easy and straightforward

Disadvantages:

- Because the data is loaded into the cache only after a cache miss, some overhead is added to the initial response time: additional round trips to the cache and the database are needed

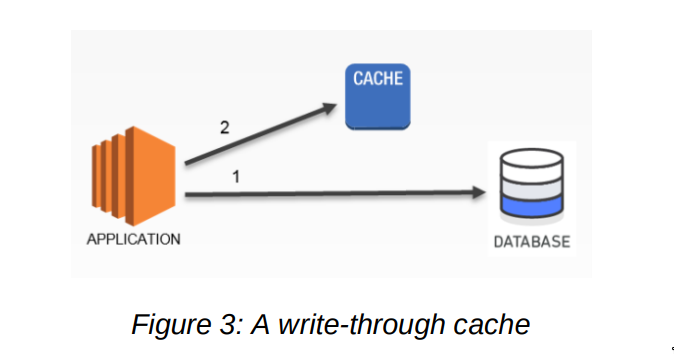

2. Write-through

- The application, a batch job, or a backend process updates the primary database

- Immediately afterward, the data is also updated in the cache
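A minimal sketch of the two write steps above, assuming hypothetical in-memory `cache` and `database` dictionaries in place of a real cache engine and database (`update_product` and `read_product` are illustrative names):

```python
from typing import Optional

# Hypothetical in-memory stand-ins for the cache and the primary database.
cache: dict[str, str] = {}
database: dict[str, str] = {}


def update_product(key: str, value: str) -> None:
    """Write a value using the write-through pattern."""
    # 1. Update the primary database first.
    database[key] = value
    # 2. Immediately afterward, update the same key in the cache.
    cache[key] = value


def read_product(key: str) -> Optional[str]:
    # Reads are served from the cache, which already holds the latest write.
    return cache.get(key)
```

Because every write lands in the cache, a read that follows a write does not need to reach the database.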

Advantages:

- Because the cache is kept up to date with the primary database, there is a much greater likelihood that the data will be found in the cache. This in turn results in better overall application performance and user experience

- The performance of your database is optimal because fewer database reads are performed

Disadvantages:

- Infrequently requested data is also written to the cache, resulting in a larger and more expensive cache

A proper caching strategy includes effective use of both write-through and lazy loading of your data, and setting an appropriate expiration for the data to keep it relevant and lean

TTL

- When you apply TTLs to your cache keys, add some time jitter to each TTL. This reduces the possibility of heavy load occurring when your cached data expires. Take, for example, the scenario of caching product information. If all your product data expires at the same time while your application is under heavy load, your backend database has to fulfill all the product requests at once, which could put too much pressure on the database and result in poor performance. Adding slight jitter, a randomly generated time value, to your TTLs (e.g., TTL = your initial TTL value in seconds + jitter) spreads the expirations out and reduces the pressure on your backend database
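The formula TTL = initial TTL + jitter could be computed as in the sketch below. The base TTL of one hour and the jitter window of five minutes are assumed values chosen for illustration, and `ttl_with_jitter` is a hypothetical helper name.

```python
import random

BASE_TTL_SECONDS = 3600    # assumed initial TTL: 1 hour
MAX_JITTER_SECONDS = 300   # assumed jitter window: up to 5 minutes


def ttl_with_jitter(base_ttl: int = BASE_TTL_SECONDS,
                    max_jitter: int = MAX_JITTER_SECONDS) -> int:
    """Return the base TTL plus a random number of extra seconds."""
    # random.randint includes both endpoints, so jitter is in [0, max_jitter].
    jitter = random.randint(0, max_jitter)
    return base_ttl + jitter
```

Each key written at the same moment would then get a slightly different expiry, so the keys do not all expire together.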

Evictions

- Evictions occur when cache memory is overfilled or exceeds the maxmemory setting for the cache, causing the engine to select keys to evict in order to manage its memory
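To make the idea concrete, here is a small sketch of a bounded cache that evicts keys once it is over its limit. It rests on two assumptions that the notes above do not state: the limit is a number of entries (`max_entries`, a simplified stand-in for a memory limit such as maxmemory), and the key chosen for eviction is the least recently used one, which is only one of several possible policies.

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    """Toy cache that evicts the least recently used key when full."""

    def __init__(self, max_entries: int) -> None:
        self.max_entries = max_entries  # stand-in for a memory limit
        self._data = OrderedDict()      # oldest entry first, newest last

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            return None
        # Mark the key as recently used by moving it to the end.
        self._data.move_to_end(key)
        return self._data[key]

    def set(self, key: str, value: str) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        # Over the limit: evict from the least recently used end.
        while len(self._data) > self.max_entries:
            self._data.popitem(last=False)
```

With a limit of two entries, writing a third key removes whichever of the first two was touched least recently.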

References

https://d0.awsstatic.com/whitepapers/Database/database-caching-strategies-using-redis.pdf