Interprocess communication

Interaction styles

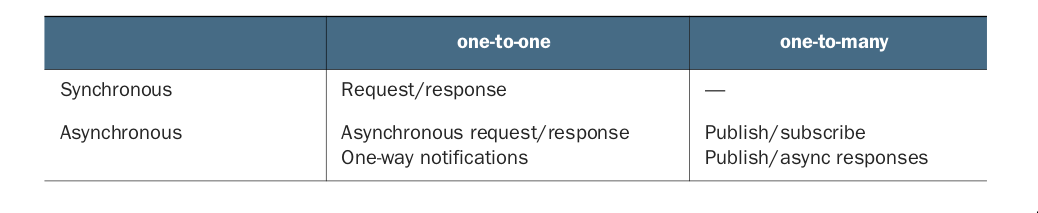

There are a variety of client-service interaction styles that can be organized into two dimensions:

The first dimension is whether the interaction is one-to-one or one-to-many:

- One-to-one: Each client request is processed by exactly one service

- One-to-many: Each request is processed by multiple services

The second dimension is whether the interaction is synchronous or asynchronous:

- Synchronous: The client expects a timely response from the service and might even block while it waits

- Asynchronous: The client doesn't block, and the response, if any, isn't necessarily sent immediately

Defining APIs in a microservice architecture

Regardless of which IPC mechanism you choose, it’s important to precisely define a service’s API (see www.programmableweb.com/news/how-to-design-great-apis-api-first-design-and-raml/how-to/2015/07/10). First you write the interface definition. Then you review the interface definition with the client developers. Only after iterating on the API definition do you then implement the service.

Evolving APIs

Use semantic versioning

- MAJOR: When you make an incompatible change to the API. Because you can't force clients to upgrade immediately, a service must simultaneously support old and new versions of an API for some period of time. For example, version 1 paths are prefixed with /v1/..., and version 2 paths with /v2/....

- MINOR: When you make backward-compatible enhancements to the API, such as:

- Adding optional attributes to request

- Adding attributes to a response

- Adding new operations

- Services should provide default values for missing request attributes

- PATCH: When you make a backward-compatible bug fix
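The versioning rules above can be sketched in a few lines of Python. This is a minimal illustration rather than a full semantic versioning parser: `Version`, `parse_version`, `can_serve`, and `apply_defaults` are hypothetical names, and the sketch assumes plain `MAJOR.MINOR.PATCH` strings with no pre-release suffixes.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


def parse_version(text: str) -> Version:
    """Parse a plain 'MAJOR.MINOR.PATCH' string (no pre-release tags)."""
    major, minor, patch = (int(part) for part in text.split("."))
    return Version(major, minor, patch)


def can_serve(service: Version, client: Version) -> bool:
    """Return True if a service at `service` can serve a client written for `client`.

    A different MAJOR means an incompatible API. Within one MAJOR, the service
    must be at least at the client's MINOR; PATCH only carries bug fixes.
    """
    if service.major != client.major:
        return False
    return service.minor >= client.minor


def apply_defaults(request: dict, defaults: dict) -> dict:
    """Fill in attributes that an older client did not send."""
    return {**defaults, **request}
```

An older client that omits a newly added optional attribute still gets a valid request once `apply_defaults` has run, which is what keeps a MINOR change backward compatible.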

Message format

Text based formats: XML and JSON

- Advantage: They are human-readable and self-describing

- Downside: the messages tend to be verbose. Overhead of parsing text, especially when messages are large.

Binary message formats: Protocol Buffers. If efficiency and performance are important, you may want to consider using a binary format.
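To get a rough feel for the size difference, the sketch below encodes the same record as JSON and as a fixed binary layout. It uses Python's standard `struct` module as a stand-in for a binary format; it is not Protocol Buffers, and the field names and layout are made up for illustration.

```python
import json
import struct

# Hypothetical order record used only for this comparison.
order = {"order_id": 1345, "consumer_id": 42, "total_cents": 2599}

# Text format: self-describing, field names travel with every message.
text_payload = json.dumps(order).encode("utf-8")

# Binary format: three little-endian unsigned 64-bit integers.
# The field names live in the schema (here, the format string), not the message.
ORDER_LAYOUT = "<QQQ"
binary_payload = struct.pack(
    ORDER_LAYOUT, order["order_id"], order["consumer_id"], order["total_cents"]
)


def decode_binary(payload: bytes) -> dict:
    """Rebuild the record; both sides must agree on the layout in advance."""
    order_id, consumer_id, total_cents = struct.unpack(ORDER_LAYOUT, payload)
    return {"order_id": order_id, "consumer_id": consumer_id, "total_cents": total_cents}
```

The binary payload is smaller, but it cannot be read without the layout, which is the trade-off between the two families of formats.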

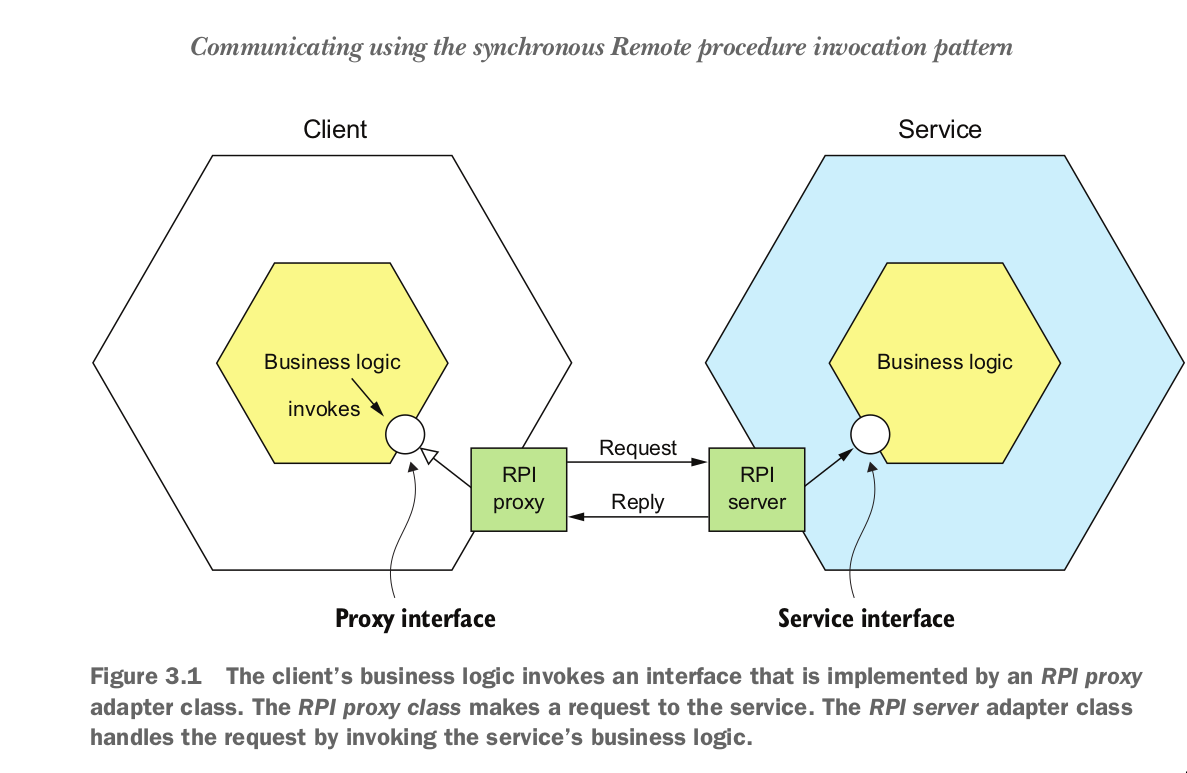

Communicating using the synchronous Remote procedure invocation pattern

REST

A key concept in REST is a resource, which typically represents a business object. REST uses HTTP verbs for manipulating resources.

The challenge of fetching multiple resources in a single request

Imagine that a REST client wanted to retrieve an Order and the Order's Consumer. A pure REST API would require the client to make at least two requests, one for the Order and another for its Consumer. A more complex scenario would require even more round-trips and suffer from excessive latency.

We can implement

We can use alternatives such as GraphQL.

The challenge of mapping operations to HTTP Verbs

A REST API should use PUT for updates. But there are multiple ways to update an order, including canceling it and revising it. One solution is to define a sub-resource for updating a particular aspect of a resource. The Order Service, for example, has a

Benefit and drawbacks

Benefits:

- It's simple and familiar

- You can test an HTTP API from within a browser using, for example, the Postman plugin, or from the command line using curl

- It directly supports request/response style communication.

Drawbacks:

- It only supports the request/response style of communication.

- Reduced availability. Because the client and service communicate directly without an intermediary to buffer messages, they must both be running for the duration of the exchange.

- Fetching multiple resources in a single request is challenging

gRPC

Benefits:

- It’s straightforward to design an API that has a rich set of update operations

- It has an efficient, compact IPC mechanism, especially when exchanging large messages.

- Bidirectional streaming enables both RPI and messaging styles of communication.

Drawbacks:

- It takes more work for JavaScript clients to consume a gRPC-based API than a REST/JSON-based API.

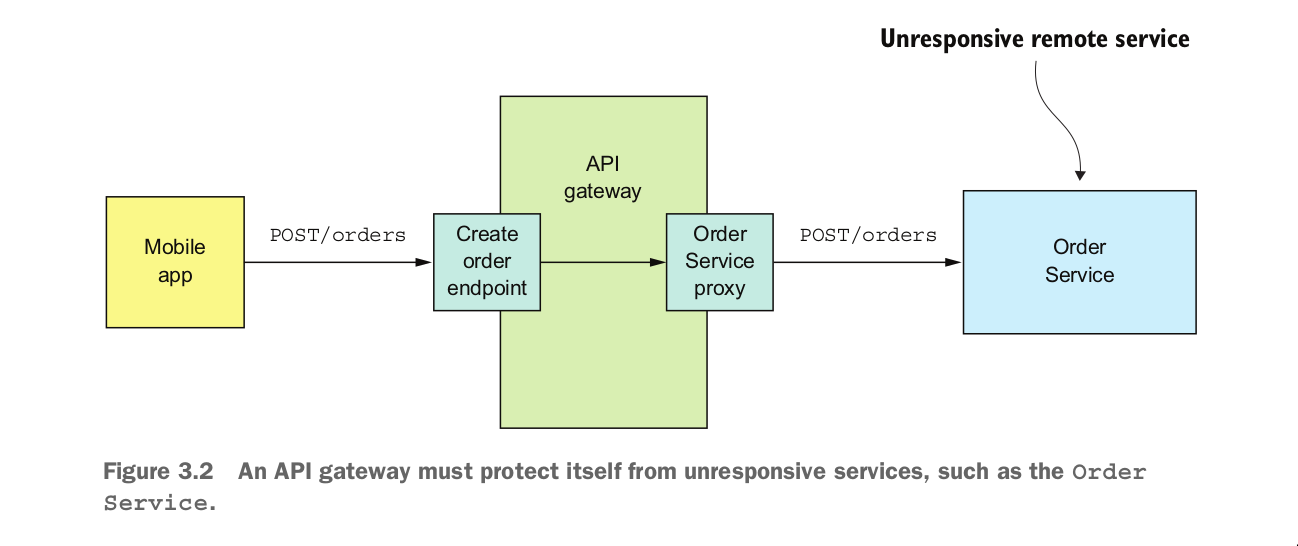

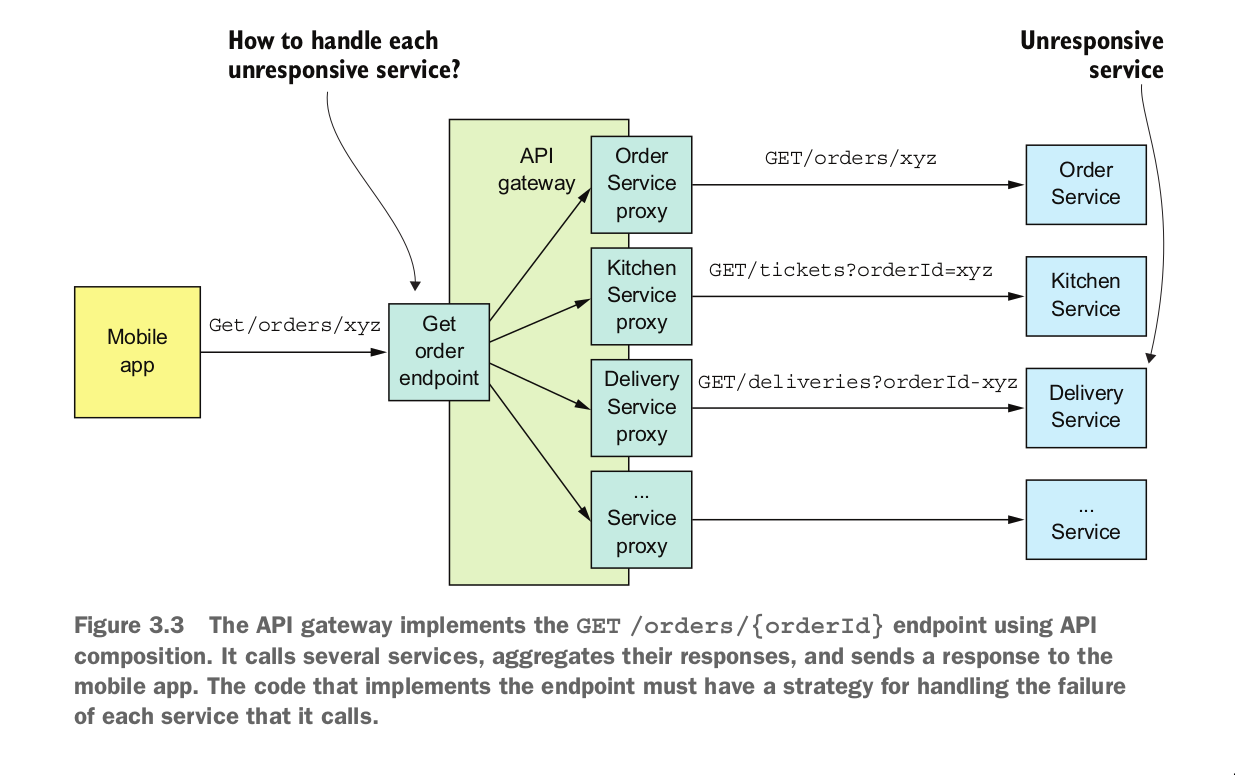

Handling partial failure using the Circuit breaker pattern

An RPI proxy that immediately rejects invocations for a timeout period after the number of consecutive failures exceeds a specified threshold.

A naive implementation of the OrderServiceProxy would block indefinitely, waiting for a response. Not only would that result in a poor user experience, but in many applications it would consume a precious resource, such as a thread.

You need to decide how to recover from a failed remote service.

Developing robust RPI proxies

- Network timeout: Never block indefinitely and always use timeouts when waiting for a response

- Limiting the number of outstanding requests from a client to a service: If the limit has been reached, it’s probably pointless to make additional requests, and those attempts should fail immediately.

- Circuit breaker pattern: Track the number of successful and failed requests, and if the error rate exceeds some threshold, trip the circuit breaker so that further attempts fail immediately. After a timeout period, the client should try again, and, if successful, close the circuit breaker.
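The circuit breaker described above can be sketched as a small wrapper around any callable. This is a simplified illustration, not a production library: `CircuitBreaker` and `CircuitOpenError` are hypothetical names, the breaker counts consecutive failures (rather than an error rate) and trips when the count reaches the threshold, and the clock is injectable so the behaviour can be exercised without waiting.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_timeout: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.consecutive_failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, operation: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Still inside the timeout period: fail immediately.
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: fall through and let one trial call proceed.
        try:
            result = operation()
        except Exception:
            self.consecutive_failures += 1
            trial_failed = self.opened_at is not None
            if trial_failed or self.consecutive_failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        # Success closes the circuit and resets the failure count.
        self.consecutive_failures = 0
        self.opened_at = None
        return result
```

In practice the wrapped `operation` would itself enforce a network timeout, so that a hung call counts as a failure instead of blocking the caller.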

Recovering from an unavailable service

One option is to return a fallback value, such as a default value or a cached response, when a service is unavailable.
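A fallback of this kind might look like the following sketch. `FallbackProxy` and the `fetch` callable are hypothetical; the proxy remembers the last successful response per key and returns it, or a default, when the remote call fails.

```python
from typing import Callable, Dict


class FallbackProxy:
    """Wraps a remote lookup and falls back to a cached or default value."""

    def __init__(self, fetch: Callable[[str], dict], default: dict) -> None:
        self._fetch = fetch
        self._default = default
        self._cache: Dict[str, dict] = {}

    def get(self, key: str) -> dict:
        try:
            value = self._fetch(key)
        except Exception:
            # Service unavailable: prefer a cached response, else the default.
            return self._cache.get(key, self._default)
        self._cache[key] = value  # remember the last good response
        return value
```

Whether a stale cached value is acceptable depends on the operation; for some requests returning an error is the safer choice.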

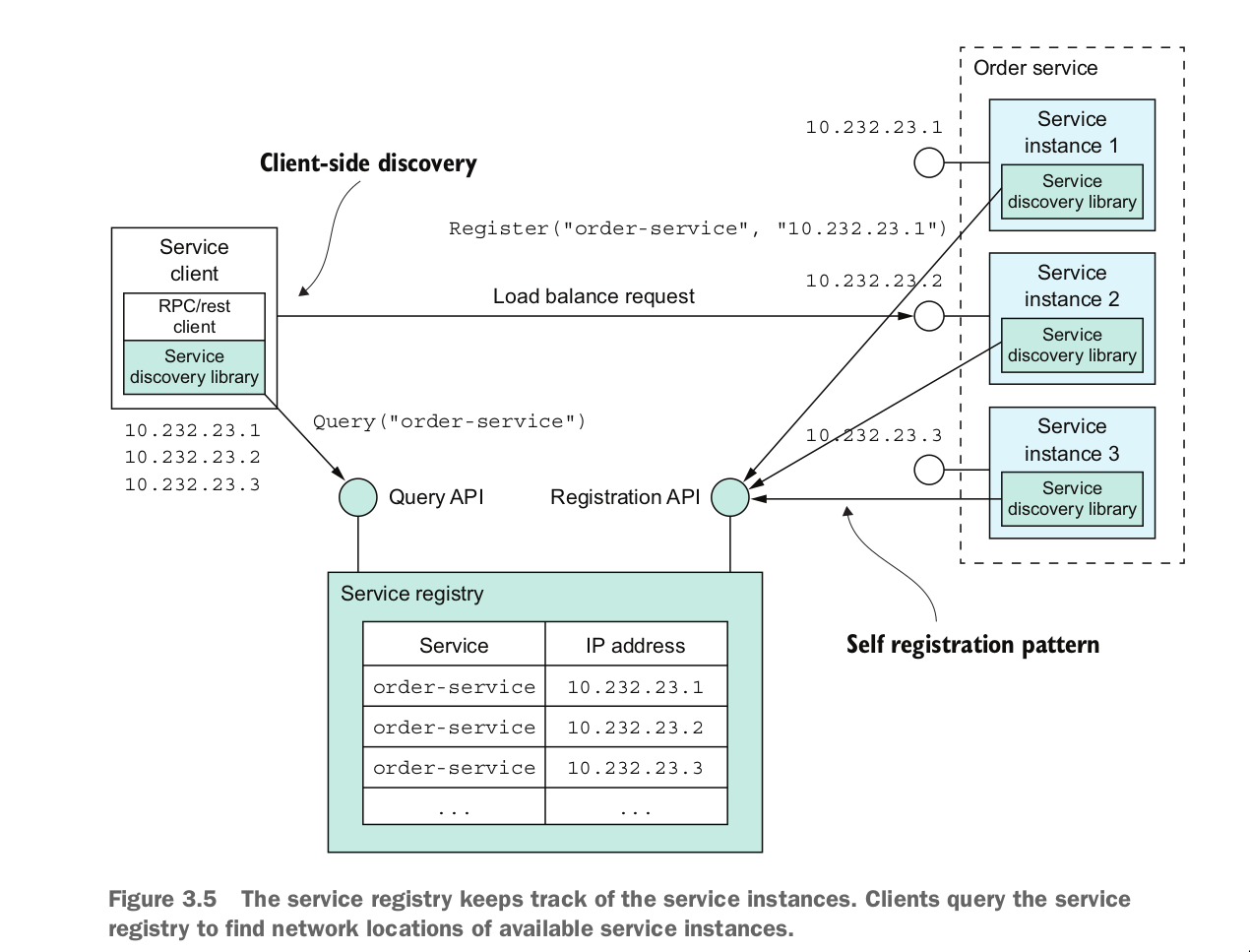

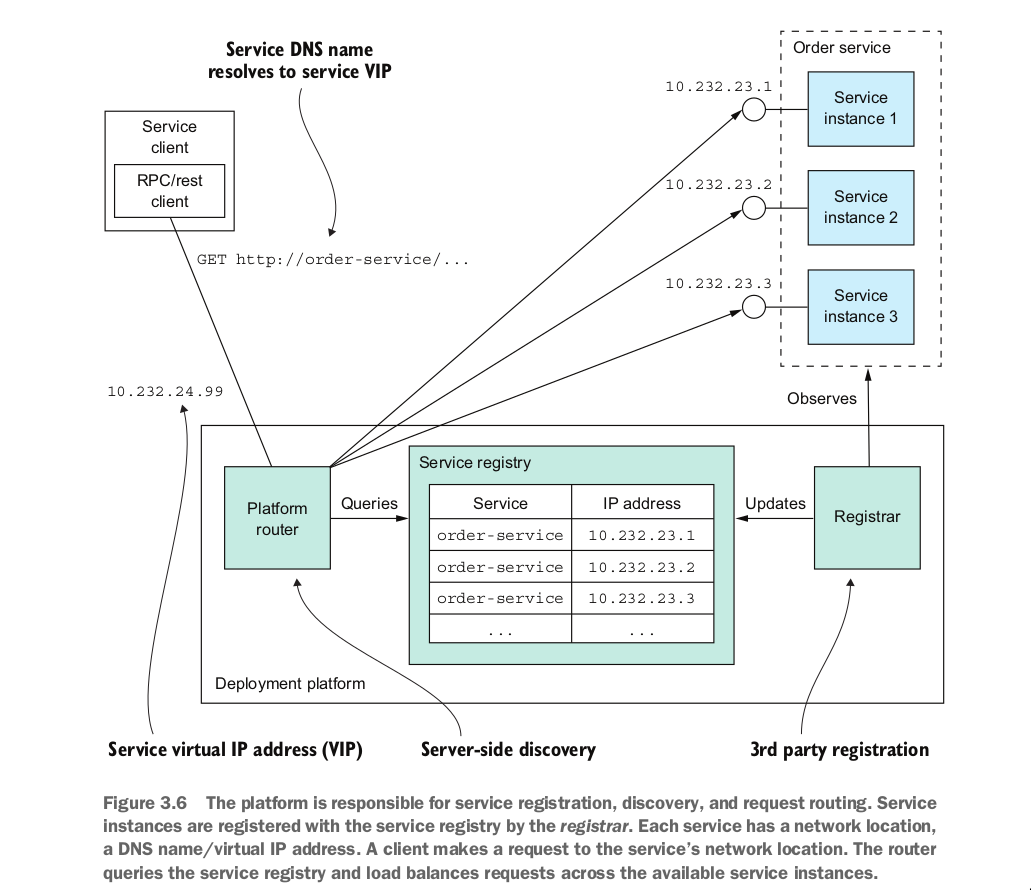

Service discovery

Application-level service discovery

Platform-provided service discovery

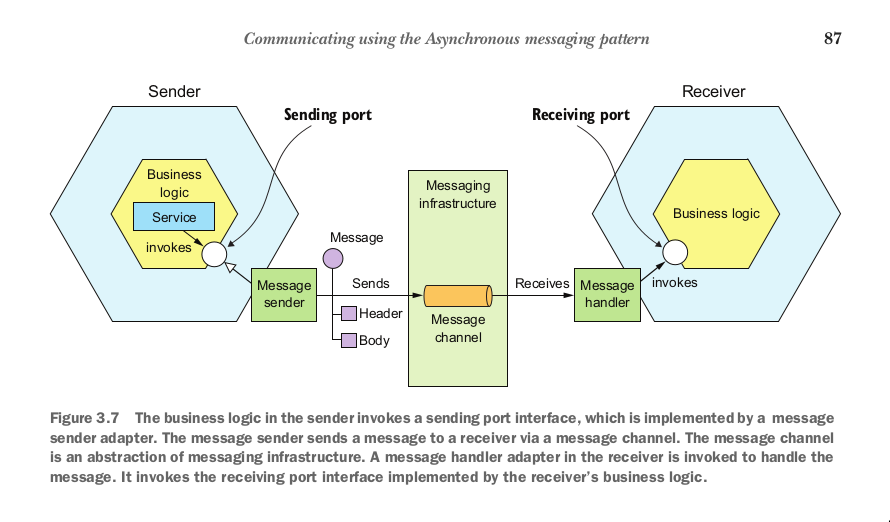

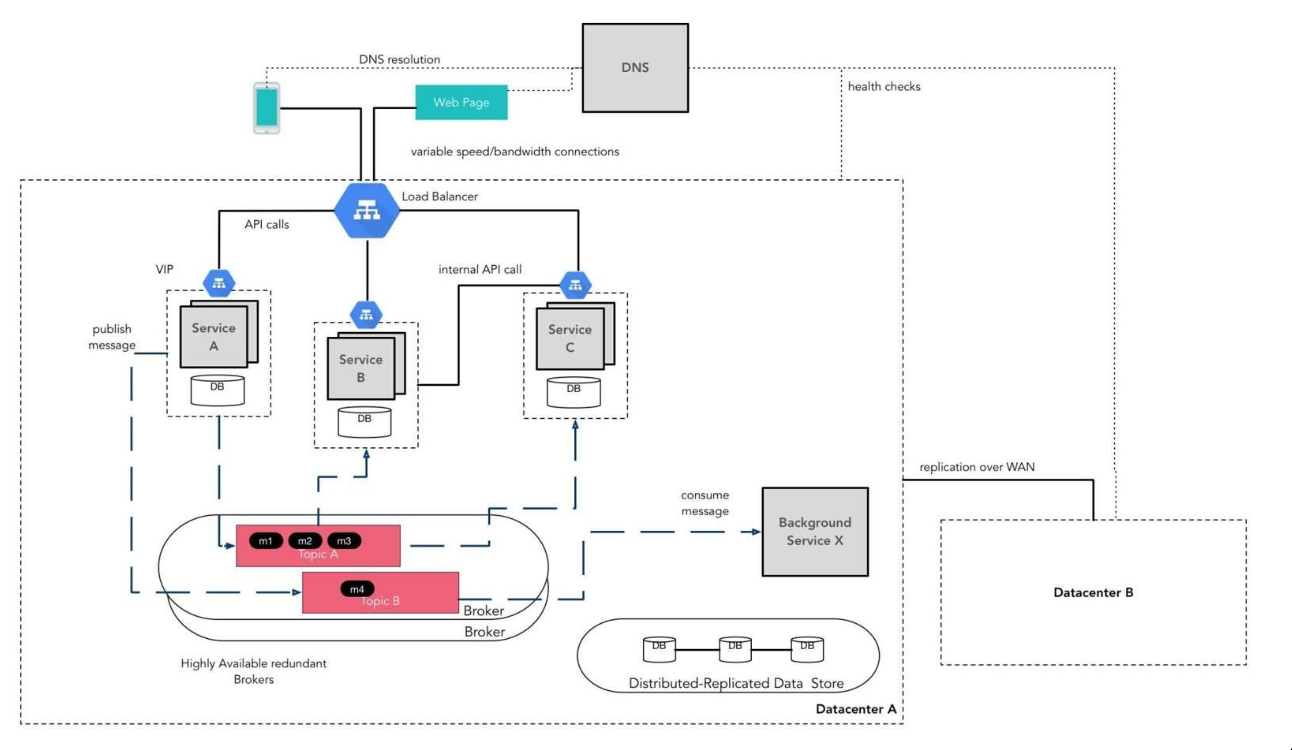

Communicating using the Asynchronous messaging pattern

Services communicate by asynchronously exchanging messages. A messaging-based application typically uses a message broker, which acts as an intermediary between the services.

Important topics:

- criteria for selecting a message broker

- scaling consumers while preserving message ordering

- detecting and discarding duplicate messages

- sending and receiving messages as part of a database transaction

Message

- A message consists of a header and a message body

- The header contains metadata that describes the data being sent, including a unique message id generated by either the sender or the messaging infrastructure

- The message body is the data being sent. There are several different kinds of messages:

- Document: A generic message that contains only data

- Command: A message that's the equivalent of an RPC request. It specifies the operation to invoke and its parameters

- Event: A message indicating that something notable has occurred in the sender
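As a rough illustration of this structure, a message can be modelled as a small immutable record. The names `Message`, `MessageKind`, and `new_message`, and the header keys, are assumptions made for this sketch; here the sender generates the message id.

```python
import uuid
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict


class MessageKind(Enum):
    DOCUMENT = "document"  # contains only data
    COMMAND = "command"    # asks the receiver to perform an operation
    EVENT = "event"        # reports that something happened in the sender


@dataclass(frozen=True)
class Message:
    kind: MessageKind
    body: dict                                    # the data being sent
    headers: Dict[str, str] = field(default_factory=dict)  # metadata


def new_message(kind: MessageKind, body: dict, **extra_headers: str) -> Message:
    """Build a message with a sender-generated unique id in the header."""
    headers = {"message_id": str(uuid.uuid4()), "kind": kind.value, **extra_headers}
    return Message(kind=kind, body=body, headers=headers)
```

A command body would typically name the operation and carry its parameters, for example `new_message(MessageKind.COMMAND, {"operation": "cancelOrder", "order_id": "1345"})`.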

Message channels

There are two kinds of channel:

- Point-to-point: Channel delivers a message to exactly one of the consumers that is reading from the channel. For example, a command message is often sent over a point-to-point channel

- Publish-subscribe: Channel delivers each message to all of the attached consumers. Services use publish-subscribe channels for the one-to-many interaction. For example, an event message is usually sent over a publish-subscribe channel
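The difference between the two channel types can be shown with an in-memory sketch. These classes are illustrative only and do not correspond to any broker's API; the point-to-point channel picks consumers round-robin, which is one possible policy among several.

```python
from typing import Callable, List

Handler = Callable[[dict], None]


class PointToPointChannel:
    """Delivers each message to exactly one of the attached consumers."""

    def __init__(self) -> None:
        self._consumers: List[Handler] = []
        self._next = 0

    def subscribe(self, handler: Handler) -> None:
        self._consumers.append(handler)

    def send(self, message: dict) -> None:
        if not self._consumers:
            raise RuntimeError("no consumers attached")
        handler = self._consumers[self._next % len(self._consumers)]
        self._next += 1
        handler(message)


class PublishSubscribeChannel:
    """Delivers each message to all attached consumers."""

    def __init__(self) -> None:
        self._consumers: List[Handler] = []

    def subscribe(self, handler: Handler) -> None:
        self._consumers.append(handler)

    def send(self, message: dict) -> None:
        for handler in self._consumers:
            handler(message)
```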

Implementing interaction styles using messaging

Synchronous request/response

Using a message broker

This paradigm is much more loosely-coupled and scalable due to the following:

- The message producers don't need to know about the consumers

- The consumers don't need to be up when the producers are producing the message

Drawbacks

- Brokers become critical failure points for the system

- The communication is more difficult to change/extend compared to HTTP/JSON.

Brokerless messaging

Services can exchange messages directly. ZeroMQ and NSQ (written in Go) are popular brokerless messaging technologies.

With a broker, by contrast, there is clear segregation between connected services: the only address a producer needs to know is that of the broker.

Benefits:

- Allows lighter network traffic and better latency, because messages go directly from the sender to the receiver

- Eliminates the possibility of the message broker being a performance bottleneck or a single point of failure

Drawbacks:

- Services need to know about each other's locations and must therefore use one of the discovery mechanisms

- Reduced availability: both sender and receiver of a message must be available while the message is being exchanged

Broker-based messaging

A sender writes the message to the message broker, the message broker delivers it to the receiver.

Benefit:

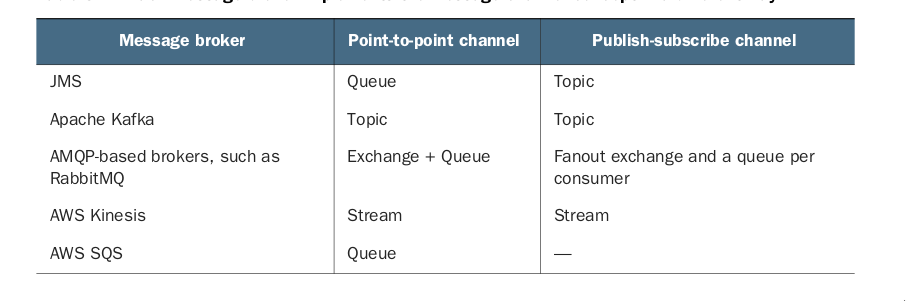

Message brokers:

Each broker makes different trade-offs. A low-latency broker might not preserve ordering and might make no guarantees to deliver messages. A messaging broker that guarantees delivery and reliably stores messages on disk will probably have higher latency.

Downsides:

- Potential performance bottleneck: There is a risk that the message broker could be a performance bottleneck. Fortunately, many modern message brokers are designed to be highly scalable.

- Additional operational complexity: The messaging system is yet another system component that must be installed, configured, and operated.

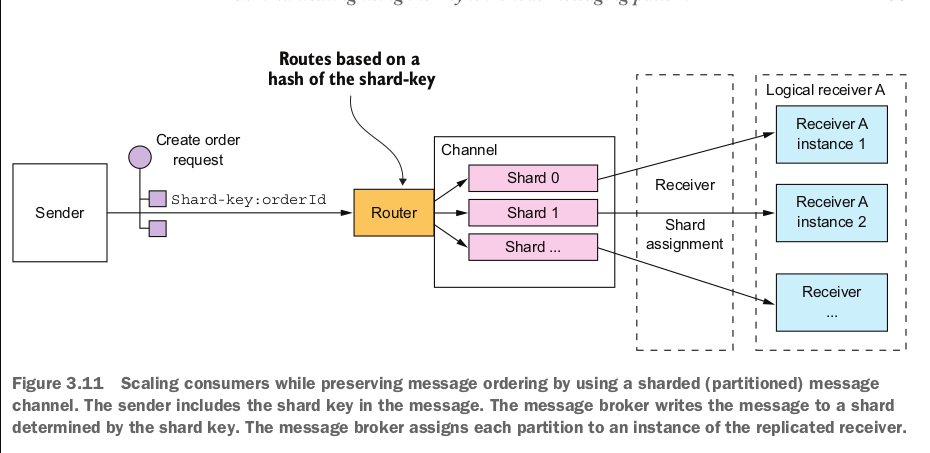

Competing receivers and message ordering

The challenge is how to scale out message receivers while preserving message ordering.

It's a common requirement to have multiple instances of a service in order to process messages concurrently. Moreover, even a single service instance will probably use threads to concurrently process multiple messages. Using multiple threads and service instances increases the throughput of the application. But the challenge is ensuring that each message is processed once and in order.

For example, imagine that there are three instances of a service reading from the same point-to-point channel and that a sender publishes Order Created, Order Updated, and Order Cancelled event messages sequentially. Because of delays due to network issues or garbage collection, messages might be processed out of order.

A common solution, used by modern brokers like Apache Kafka, is to use sharded (partitioned) channels.

- A sharded channel consists of two or more shards, each of which behaves like a channel

- The sender specifies a shard key in the message's header. The message broker uses the shard key to assign the message to a particular shard/partition.

- The message broker groups together multiple instances of a receiver and treats them as the same logical receiver.

For example, each Order event message has the orderID as its shard key. Each event for a particular order is published to the same shard, which is read by a single consumer instance. As a result, these messages are guaranteed to be processed in order.
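The routing idea can be sketched as a stable hash of the shard key modulo the number of shards. This is only an illustration: `shard_for` and `route` are hypothetical helpers, and SHA-256 is used here for a deterministic hash, which is an assumption and not the hash any particular broker uses.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Tuple

Event = Tuple[str, str]  # (order_id, event_type)


def shard_for(shard_key: str, shard_count: int) -> int:
    """Map a shard key to a shard index that is stable across processes."""
    digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def route(events: List[Event], shard_count: int) -> Dict[int, List[Event]]:
    """Append each event to its shard, preserving publication order per shard."""
    shards: Dict[int, List[Event]] = defaultdict(list)
    for order_id, event_type in events:
        shards[shard_for(order_id, shard_count)].append((order_id, event_type))
    return dict(shards)
```

Because every event with the same orderID lands in the same shard, and one consumer instance reads that shard sequentially, the events of a given order keep their relative order even though different orders are processed in parallel.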

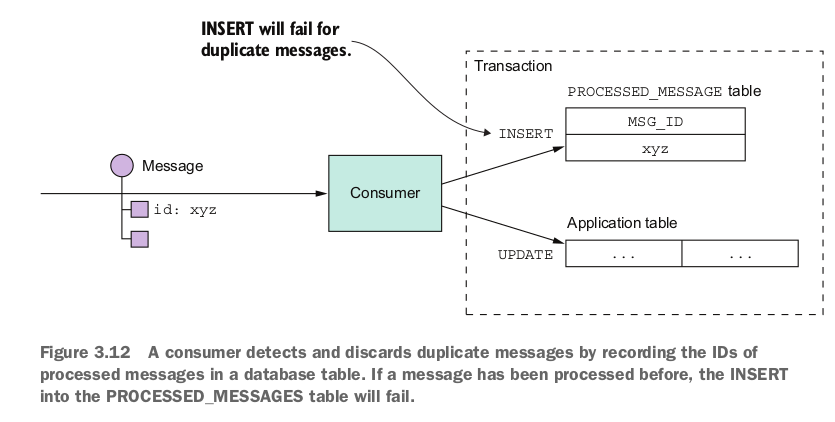

Handling duplicate messages

- A message broker should ideally deliver each message only once, but guaranteeing exactly-once messaging is usually too costly. Instead, most message brokers promise to deliver a message at least once.

There are a couple of different ways to handle duplicate messages:

- Write idempotent message handlers: Application logic is idempotent if calling it multiple times with the same input values has no additional effect. For instance, cancelling an already-cancelled order is an idempotent operation

- Track messages and discard duplicates:

A simple solution is for a message consumer to track the messages that it has processed using the message id and discard any duplicates.

When a consumer handles a message, it records the message id in a database table. If a message is a duplicate, the INSERT will fail and the consumer can discard the message.
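A minimal sketch of this approach using Python's built-in `sqlite3` module follows. The table name `processed_messages` and the helper names are assumptions for the example; the primary key on the message id is what makes the second INSERT fail.

```python
import sqlite3
from typing import Callable


def create_tracking_table(conn: sqlite3.Connection) -> None:
    conn.execute("CREATE TABLE processed_messages (message_id TEXT PRIMARY KEY)")


def handle_once(conn: sqlite3.Connection, message_id: str, handler: Callable[[], None]) -> bool:
    """Run `handler` unless `message_id` was already recorded.

    Returns True if the message was processed, False if it was a duplicate.
    """
    try:
        # `with conn` commits on success and rolls back if anything raises,
        # so the id is only recorded when the handler also succeeds.
        with conn:
            conn.execute(
                "INSERT INTO processed_messages (message_id) VALUES (?)",
                (message_id,),
            )
            handler()
    except sqlite3.IntegrityError:
        # Primary key violation: this message id was seen before.
        return False
    return True
```

This sketch assumes the handler does not itself raise `sqlite3.IntegrityError`; a real consumer would distinguish the duplicate-id case from constraint failures in its own business tables.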

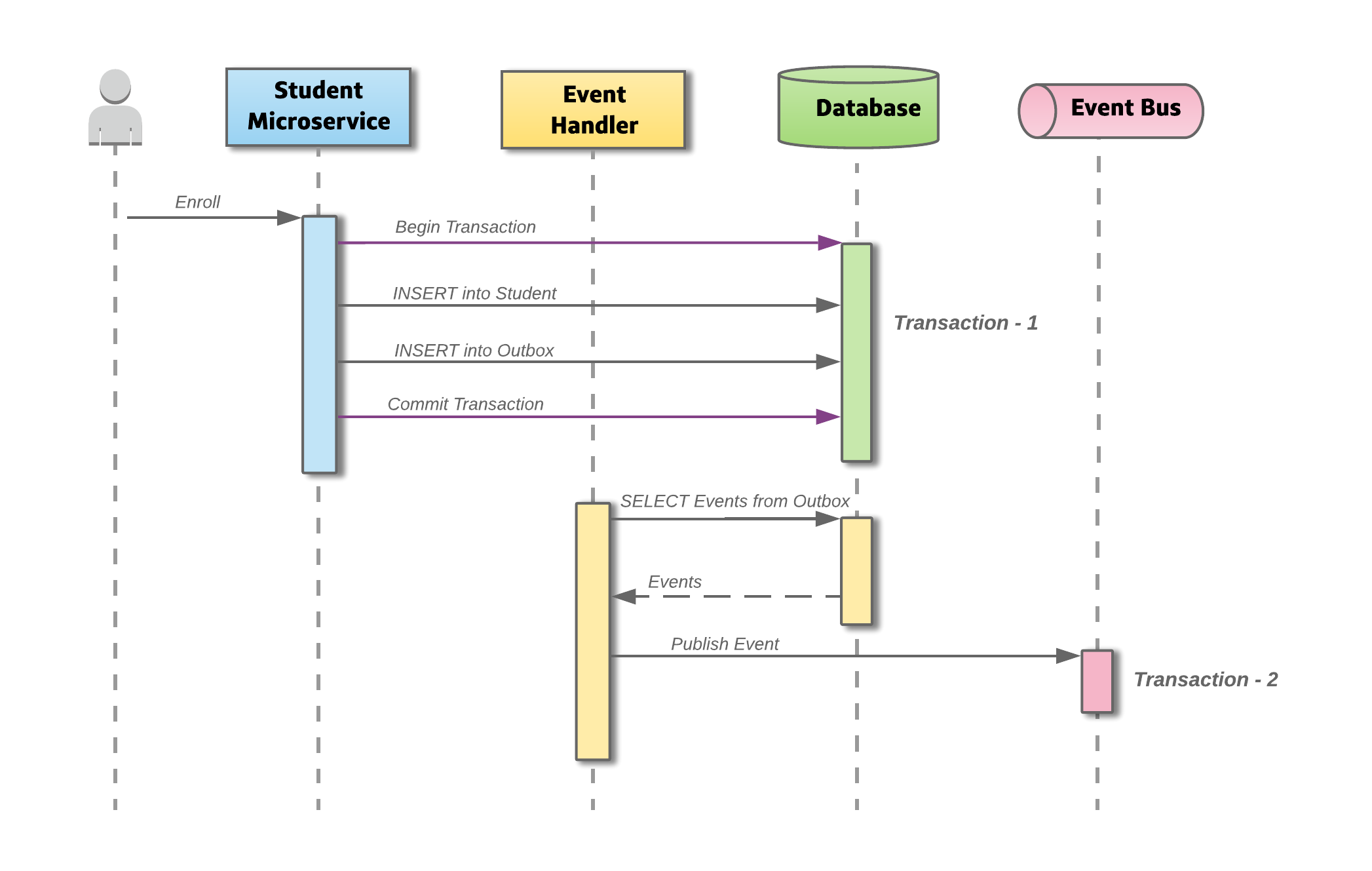

Transactional messaging

A service often needs to publish messages as part of a transaction that updates the database. Both the database update and the sending of the message must happen within a transaction. Otherwise, a service might update the database and then crash, for example, before sending the message. If the service doesn't perform these two operations atomically, a failure could leave the system in an inconsistent state.

The traditional solution is to use a distributed transaction that spans the database and the message broker. Distributed transactions aren't a good choice for modern applications. As a result, an application must use a different mechanism to reliably publish messages.

Using a database table as a message queue

Transactional outbox:

The idea of this approach is to have an “Outbox” table in the service’s database. When the service receives an enrollment request, it not only inserts a row into the Student table but also inserts a record representing the event into the Outbox table. The two database actions are done as part of the same transaction.

An asynchronous process monitors the Outbox table for new entries and if there are any, it publishes the events to the Event Bus. The pattern merely splits the two transactions over different services, increasing reliability.
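The enrollment example can be sketched with `sqlite3` as follows. The schema, the `StudentEnrolled` event name, and the `publish` callback are assumptions made for illustration; in a real system `publish` would hand the event to the message broker.

```python
import json
import sqlite3
from typing import Callable


def create_schema(conn: sqlite3.Connection) -> None:
    conn.executescript(
        """
        CREATE TABLE student (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
        CREATE TABLE outbox (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            event_type TEXT NOT NULL,
            payload TEXT NOT NULL,
            published INTEGER NOT NULL DEFAULT 0
        );
        """
    )


def enroll_student(conn: sqlite3.Connection, student_id: int, name: str) -> None:
    """Insert the business row and the outbox row in one local transaction."""
    with conn:  # commits both inserts together, or rolls both back
        conn.execute("INSERT INTO student (id, name) VALUES (?, ?)", (student_id, name))
        conn.execute(
            "INSERT INTO outbox (event_type, payload) VALUES (?, ?)",
            ("StudentEnrolled", json.dumps({"student_id": student_id, "name": name})),
        )


def relay_outbox(conn: sqlite3.Connection, publish: Callable[[str, dict], None]) -> int:
    """Publish unpublished outbox rows in insertion order; return how many were sent."""
    rows = conn.execute(
        "SELECT id, event_type, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, event_type, payload in rows:
        publish(event_type, json.loads(payload))
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)
```

If the relay crashes after publishing but before marking a row, that event is published again on the next run, so consumers still need to handle duplicate messages.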

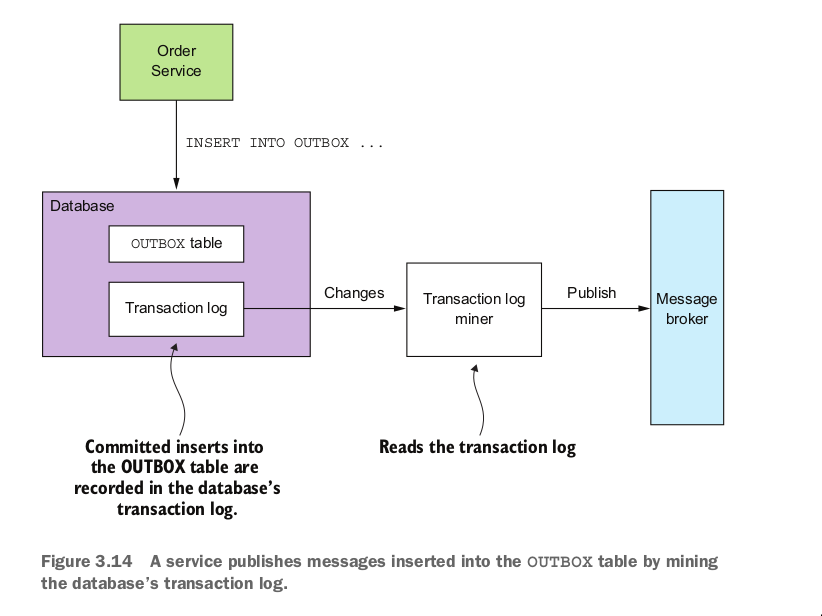

Publishing events by applying the transaction log tailing pattern

Every committed update made by an application is represented as an entry in the database’s transaction log. A transaction log miner can read the transaction log and publish each change as a message to the message broker.

https://github.com/debezium/debezium

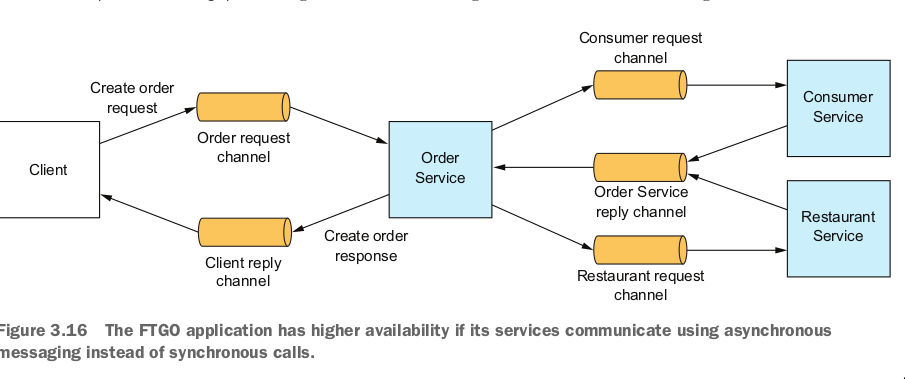

Using asynchronous messaging to improve availability

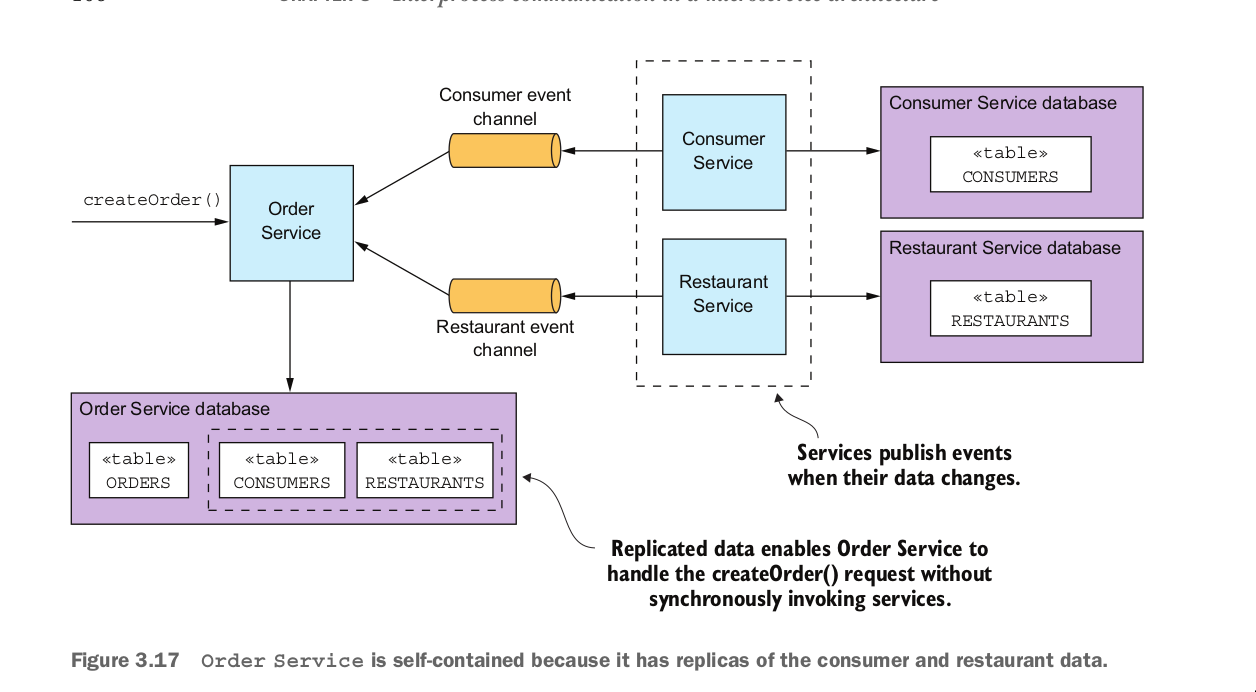

Replicate data

One way to minimize synchronous requests during request processing is to replicate data. A service maintains a replica of the data it needs when processing requests. For example, Order Service could maintain a replica of data owned by Consumer Service and Restaurant Service.

Consumer Service and Restaurant Service publish events whenever their data changes. Order Service subscribes to those events and updates its replica.

Drawback:

Finish processing after returning a response

When the createOrder() operation is invoked, the sequence of events is as follows:

1. Order Service creates an Order in a PENDING state.

2. Order Service returns a response to its client containing the orderID.

3. Order Service sends a ValidateConsumerInfo message to Consumer Service.

4. Order Service sends a ValidateOrderDetails message to Restaurant Service.

5. Restaurant Service receives a ValidateOrderDetails message, verifies the menu items are valid and that the restaurant can deliver to the order’s delivery address, and sends an OrderDetailsValidated message to Order Service.

6. Order Service receives ConsumerValidated and OrderDetailsValidated and changes the state of the order to VALIDATED.

Order Service can receive the ConsumerValidated and OrderDetailsValidated messages in either order. If it receives the ConsumerValidated message first, it changes the state of the order to CONSUMER_VALIDATED, whereas if it receives the OrderDetailsValidated message first, it changes its state to ORDER_DETAILS_VALIDATED. Order Service changes the state of the Order to VALIDATED when it receives the other message.
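The order-independent state changes can be sketched as a small transition table. The state and message names come from the text; `OrderState`, `TRANSITIONS`, and `next_state` are hypothetical, and ignoring unexpected or repeated messages is a design assumption of this sketch.

```python
from enum import Enum


class OrderState(Enum):
    PENDING = "PENDING"
    CONSUMER_VALIDATED = "CONSUMER_VALIDATED"
    ORDER_DETAILS_VALIDATED = "ORDER_DETAILS_VALIDATED"
    VALIDATED = "VALIDATED"


# (current state, received message type) -> new state
TRANSITIONS = {
    (OrderState.PENDING, "ConsumerValidated"): OrderState.CONSUMER_VALIDATED,
    (OrderState.PENDING, "OrderDetailsValidated"): OrderState.ORDER_DETAILS_VALIDATED,
    (OrderState.CONSUMER_VALIDATED, "OrderDetailsValidated"): OrderState.VALIDATED,
    (OrderState.ORDER_DETAILS_VALIDATED, "ConsumerValidated"): OrderState.VALIDATED,
}


def next_state(state: OrderState, message_type: str) -> OrderState:
    """Return the order's new state; messages with no transition leave it unchanged."""
    return TRANSITIONS.get((state, message_type), state)
```

Leaving the state unchanged for a repeated message also makes the handler idempotent, which fits with at-least-once delivery.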