Scalability bottlenecks

The C10K problem

Web servers of the time could not handle more than 10,000 concurrent connections, for several reasons:

- Each active request occupies one thread, which competes with all other threads for resources such as CPU and memory.

- The larger number of threads leads to more context switching.

- Networking problems: For each incoming packet, the kernel had to walk through all 10K processes to figure out which thread should handle it.

- The select/poll constructs needed a linear scan to figure out which file descriptors had an event.
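The linear-scan cost of select can be seen in a minimal sketch using Python's standard `select` module. The helper name `ready_with_select` and the choice of 100 socket pairs are illustrative assumptions, not part of the original notes. The point is that the full interest list is handed over on every call, even though only one socket has data.

```python
import select
import socket


def ready_with_select(socks, timeout=1.0):
    """Ask select() which sockets are readable.

    The whole interest list is passed in on every call, so the work done
    grows with len(socks) even when only one socket has data.
    """
    readable, _, _ = select.select(socks, [], [], timeout)
    return readable


# 100 connected pairs; we watch one end of each and write to just one peer.
pairs = [socket.socketpair() for _ in range(100)]
watched = [a for a, _b in pairs]
pairs[42][1].send(b"x")

# All 100 sockets are submitted, but only watched[42] comes back as ready.
ready = ready_with_select(watched)
```

With 10K connections the same call would submit 10K descriptors each time, which is the overhead that epoll and kqueue avoid by keeping the interest set registered in the kernel.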

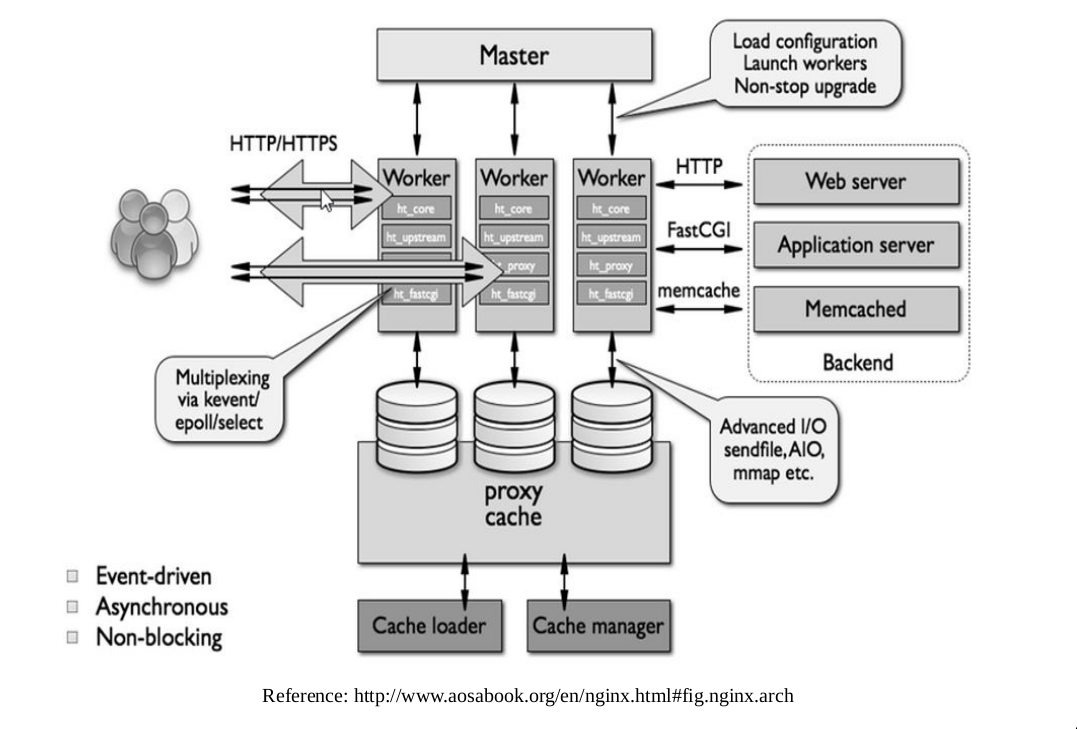

Building on these lessons, web servers with an entirely different programming model (the event-driven model) were designed. Nginx is an example:

- It is designed for high performance and extensibility. It consists of a limited set of worker processes (usually one per CPU core) that route requests using non-blocking, event-driven I/O, built on the kernel's native event notification facilities such as epoll (Linux) and kqueue (BSD/macOS).

- The number of processes is fixed, typically one per core.

- Each process handles a large number of concurrent connections using asynchronous I/O, without spawning separate threads. It manages a set of file descriptors (FDs), one per connection, and handles events on these FDs using the efficient epoll system call.
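The event-driven model described above can be sketched as a single-threaded echo loop. This is not how Nginx is implemented; it is a minimal illustration using Python's standard `selectors` module, whose `DefaultSelector` uses epoll or kqueue where the platform provides them. The function names `make_listener` and `run_once` and the echo behaviour are assumptions made for the example.

```python
import selectors
import socket


def make_listener(host="127.0.0.1", port=0):
    """Create a non-blocking listening socket (port 0 picks any free port)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((host, port))
    sock.listen()
    sock.setblocking(False)
    return sock


def run_once(sel, listener, timeout=0.1):
    """Handle one batch of ready events and return how many there were."""
    events = sel.select(timeout)
    for key, _mask in events:
        if key.fileobj is listener:
            # New connection: register its FD instead of spawning a thread.
            conn, _addr = listener.accept()
            conn.setblocking(False)
            sel.register(conn, selectors.EVENT_READ)
        else:
            conn = key.fileobj
            data = conn.recv(4096)
            if data:
                # Echo back; acceptable for small payloads in a sketch.
                conn.sendall(data)
            else:
                # Peer closed the connection: stop watching this FD.
                sel.unregister(conn)
                conn.close()
    return len(events)
```

A server would register the listener once with `sel.register(listener, selectors.EVENT_READ)` and then call `run_once` in a loop. One thread serves every connection, and the selector reports only the FDs that actually have events.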

The Thundering Herd problem

Many cache entries expire at the same time, resulting in a flood of simultaneous requests to the backend.

One way to avoid this is to slightly randomize (jitter) the cache TTLs so that entries do not all expire together.
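A minimal sketch of TTL randomization, assuming a hypothetical helper `jittered_ttl` and a jitter of plus or minus 10% (both are illustrative choices, not values from the original notes):

```python
import random


def jittered_ttl(base_ttl, jitter_fraction=0.1, rng=random):
    """Return base_ttl shifted by a random offset of up to +/- jitter_fraction.

    Entries written at the same moment get slightly different lifetimes,
    so they do not all expire and hit the backend together.
    """
    spread = base_ttl * jitter_fraction
    return base_ttl + rng.uniform(-spread, spread)


# Example: a nominal 300-second TTL lands somewhere in the 270-330 s range.
ttl_seconds = jittered_ttl(300)
```

The resulting value would be passed as the expiry when writing to the cache. A larger `jitter_fraction` spreads expirations further apart at the cost of less predictable freshness.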